How to Make AI Sentient in Unity, Part I

9th October 2021 • 13 min read

Today, I'd like to show you one way to grant sentience to your AI agent in Unity so it can T-800 the world…

Ok, not really. However, I'm going to guide you through an implementation of eyes and ears you can use for your NPCs, so they will be able to react when they see or hear a specific object in a scene, which is also pretty cool, right? :-)

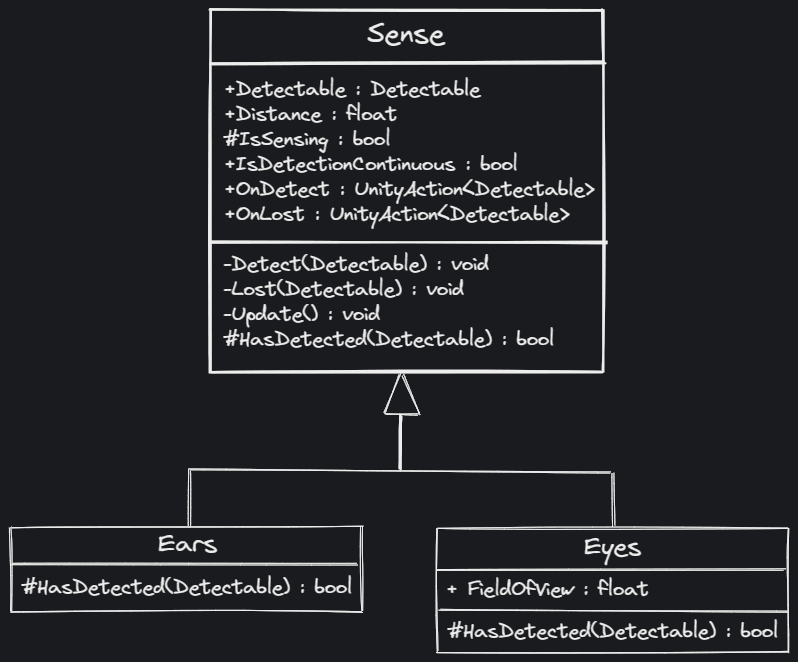

In Part I, we're going to see the base class Sense, and Eyes and Ears that inherit from it. We're also going to see how to take advantage of UnityAction to keep the implementations of senses and reactions nicely decoupled.

In the subsequent and final Part II, we're going to dive into the implementation of some actual reactive AI behavior and find out how to harness the power of a simple State pattern, which is enough if we don't plan any complex AI.

If you do plan to have a game with more complex AI behavior, look into behavior trees, Goal-Oriented Action Planning (GOAP), or Hierarchical Task Networks (HTN).

I assume you have at least a basic understanding of the Unity engine and C# programming language, though I tried to make this tutorial reasonably beginner-friendly. Feedback is always welcome.

Before we continue, I encourage you to get the final example project from GitHub and open it in Unity 2020.3.17f1, so you can see the parts of code I'll be describing in its context.

So if you're ready, without further ado, let's get started 🚀.

Detectable and PlayerController components

First, we don't want our AI to uncontrollably react to every GameObject in the scene, so we need to mark only the Player as detectable.

It can be achieved with Tags, for instance, but marking objects by adding a custom component gives us the possibility to store and pass some useful data.

In this example, it's the flag CanBeHear that is set by PlayerController only when a player is moving and unset when the player stays still. You can see in the hierarchy that both Detectable and PlayerController are attached to the Player object.

    using UnityEngine;

    public class Detectable : MonoBehaviour
    {
        public bool CanBeHear;
    }

Detectable is a component that marks an object as detectable and provides data related to detection.

As for the PlayerController, I'm not going to describe its implementation in much detail; it's very basic and not what we're focusing on in this post.

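But notice how we set detectable.CanBeHear to true only if verticalAxis is greater than zero, in other words, only while the player is moving forward. (If backward movement should be audible too, compare Mathf.Abs(verticalAxis) against zero instead.)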

This player controller depends on the Detectable component, so it's a good practice to decorate the class with the RequireComponent attribute, just as you can see on the first line below.

    [RequireComponent(typeof(Detectable))]
    public class PlayerController : MonoBehaviour
    {
        public float MoveSpeed = 6f;
        public float RotationSpeed = 100f;

        private Detectable detectable;

        private void Start()
        {
            detectable = GetComponent<Detectable>();
        }

        private void Update()
        {
            float verticalAxis = Input.GetAxis("Vertical");
            float horizontalAxis = Input.GetAxis("Horizontal");

            // Audible only while there is forward input.
            detectable.CanBeHear = verticalAxis > 0f;

            transform.Translate(Vector3.forward * verticalAxis * MoveSpeed * Time.deltaTime, Space.Self);
            transform.Rotate(Vector3.up * horizontalAxis * RotationSpeed * Time.deltaTime);
        }
    }

When you add a component decorated with the RequireComponent attribute, Unity automatically adds any required components that are missing. It also prevents you from accidentally removing components that other components depend on.

Senses Base Class

Before we talk about Eyes and Ears, let's point out that although they need to have a slightly different implementation, they both can share a fair bit of common logic.

That's a good place for using inheritance. You might have heard that you should always favour composition over inheritance and that some modern languages don't even have inheritance.

But the short-sighted conclusion "inheritance is bad" is just wrong. Inheritance represents an *is a* relationship, like *a cat is an animal*, or in our case, eyes and ears are senses, while composition represents a *has a* relationship, like *a car has an engine*, or in Unity, a GameObject can have a MeshRenderer, Rigidbody, Collider, and many other components.

In fact, in relation to a GameObject, our Eyes and Ears are also components: Sense is a MonoBehaviour, which makes Eyes and Ears MonoBehaviours too.

Both inheritance and composition are useful OOP concepts, and they are often used together. Used right, they are very powerful; misused, both can lead to an unmanageable mess. But let's get back to the original topic.

In the Sense class implementation below, notice that the protected virtual HasDetected method contains no logic. That's because we won't attach the base class itself to any GameObject, only its child classes Eyes and Ears, which override this method with their own logic, as we'll soon see.

We'll see what the IsDetectionContinuous flag is good for in Part II, in the context of behavior. For now, just note that it's set to true on Ears and to false on Eyes. In the scene hierarchy, Ears and Eyes are child objects of the Enemy, each carrying its respective component.

    using UnityEngine;
    using UnityEngine.Events;

    public class Sense : MonoBehaviour
    {
        public Detectable Detectable;
        public float Distance;
        protected bool IsSensing;
        public bool IsDetectionContinuous = true;

        public UnityAction<Detectable> OnDetect;
        public UnityAction<Detectable> OnLost;

        private void Detect(Detectable detectable)
        {
            IsSensing = true;
            OnDetect?.Invoke(detectable);
        }

        private void Lost(Detectable detectable)
        {
            IsSensing = false;
            OnLost?.Invoke(detectable);
        }

        void Update()
        {
            if (IsSensing)
            {
                // Already sensing: report the loss, or keep reporting if continuous.
                if (!HasDetected(Detectable))
                {
                    Lost(Detectable);
                    return;
                }

                if (IsDetectionContinuous)
                {
                    Detect(Detectable);
                }
            }
            else
            {
                // Not sensing yet: fire OnDetect on the first successful check.
                if (!HasDetected(Detectable))
                    return;

                Detect(Detectable);
            }
        }

        // Overridden by Eyes and Ears; the base class never detects anything.
        protected virtual bool HasDetected(Detectable detectable) => false;
    }

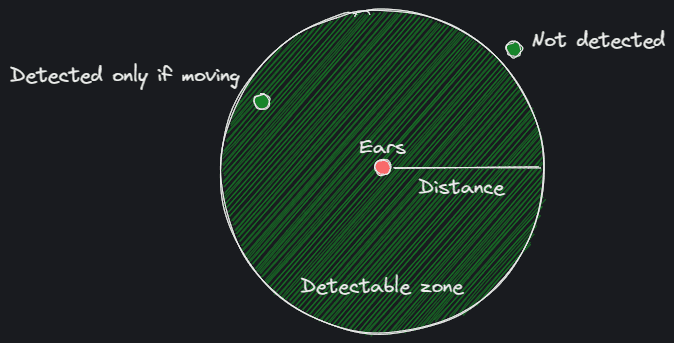
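To get a feel for how IsDetectionContinuous changes the stream of events before we reach Part II, here is a minimal sketch that mirrors the branching of Sense.Update outside Unity. SenseSimulation and its Run method are hypothetical names, plain strings stand in for the OnDetect and OnLost callbacks, and each bool in the input plays the role of one frame's HasDetected result.

```csharp
using System.Collections.Generic;

// Hypothetical stand-in for Sense: same branching as Sense.Update,
// but driven by a list of per-frame HasDetected results instead of Unity.
public static class SenseSimulation
{
    public static List<string> Run(IEnumerable<bool> hasDetectedPerFrame, bool isDetectionContinuous)
    {
        var events = new List<string>();
        bool isSensing = false;

        foreach (bool hasDetected in hasDetectedPerFrame)
        {
            if (isSensing)
            {
                if (!hasDetected)
                {
                    isSensing = false;
                    events.Add("lost");      // corresponds to OnLost
                    continue;
                }

                if (isDetectionContinuous)
                {
                    events.Add("detect");    // OnDetect repeats every frame
                }
            }
            else if (hasDetected)
            {
                isSensing = true;
                events.Add("detect");        // first OnDetect
            }
        }

        return events;
    }
}
```

Feeding it the frames false, true, true, false yields detect, detect, lost with the flag set, and detect, lost without it. A continuous sense keeps reporting while the target stays detected; a non-continuous one fires only on the transitions.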

Ears Component

With Sense as a base class, the Ears class derived from it is actually pretty simple; all it needs to do is override the HasDetected method so that it returns true when the detectable is within Distance and its CanBeHear flag is set.

    using UnityEngine;

    public class Ears : Sense
    {
        protected override bool HasDetected(Detectable detectable)
        {
            return Vector3.Distance(detectable.transform.position, transform.position) <= Distance && detectable.CanBeHear;
        }
    }

With the Distance function provided by the UnityEngine.Vector3 struct, our implementation of detection for ears is just a single line of code.

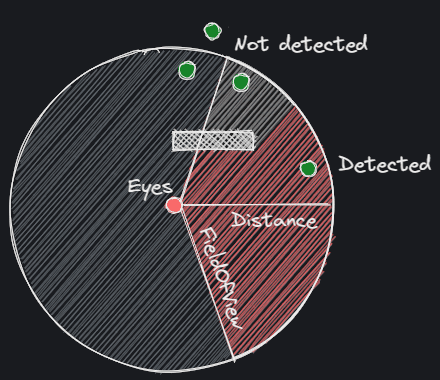

Eyes Component

As you've probably guessed, the implementation of the Eyes class is not a one-liner. However, it's still quite simple. The detectable zone is just determined not only by distance but also by the field of view.

When a detectable object is inside the zone, the Eyes component needs to perform just one extra test to find out whether the object is occluded or not.

    using UnityEngine;

    public class Eyes : Sense
    {
        [Range(0, 180)]
        public float FieldOfView;

        private float FieldOfViewDot;

        void Start()
        {
            FieldOfViewDot = 1 - Remap(FieldOfView * 0.5f, 0, 90, 0, 1f);
        }

        private float Remap(float value, float originalStart, float originalEnd, float targetStart, float targetEnd)
        {
            return targetStart + (value - originalStart) * (targetEnd - targetStart) / (originalEnd - originalStart);
        }

        protected override bool HasDetected(Detectable detectable)
        {
            return IsInVisibleArea(detectable) && IsNotOccluded(detectable);
        }

        private bool IsInVisibleArea(Detectable detectable)
        {
            float distance = Vector3.Distance(detectable.transform.position, this.transform.position);

            return distance <= Distance && Vector3.Dot(Direction(detectable.transform.position, this.transform.position), this.transform.forward) >= FieldOfViewDot;
        }

        // Unit vector pointing from "to" towards "from".
        private Vector3 Direction(Vector3 from, Vector3 to)
        {
            return (from - to).normalized;
        }

        private bool IsNotOccluded(Detectable detectable)
        {
            // Visible only if the first thing the ray hits is the detectable itself.
            if (Physics.Raycast(transform.position, detectable.transform.position - transform.position, out RaycastHit hit, Distance))
            {
                return hit.collider.gameObject.Equals(detectable.gameObject);
            }

            return false;
        }
    }

When you have compound conditions like this joined with &&, order them so the cheapest one comes first. C# evaluates && from left to right and stops at the first false condition, so the remaining ones are never executed and you save some cycles.
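A small sketch of that short-circuit behaviour, runnable outside Unity. CheapCheck and ExpensiveCheck are hypothetical stand-ins for a distance test and a raycast, and the counter exists only to show how often the expensive side actually runs.

```csharp
public static class ShortCircuitDemo
{
    // Counts how many times the expensive check was really evaluated.
    public static int ExpensiveCalls;

    private static bool CheapCheck(float distance, float maxDistance)
    {
        return distance <= maxDistance;
    }

    private static bool ExpensiveCheck()
    {
        ExpensiveCalls++;
        return true;
    }

    public static bool HasDetected(float distance, float maxDistance)
    {
        // && evaluates its right operand only when the left one is true.
        return CheapCheck(distance, maxDistance) && ExpensiveCheck();
    }
}
```

Calling HasDetected with a distance beyond the maximum returns false and leaves ExpensiveCalls untouched; only a call that passes the cheap check increments it.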

As you can see, the HasDetected method is still a one-liner, but both the IsInVisibleArea and IsNotOccluded methods need slightly more sophisticated logic.

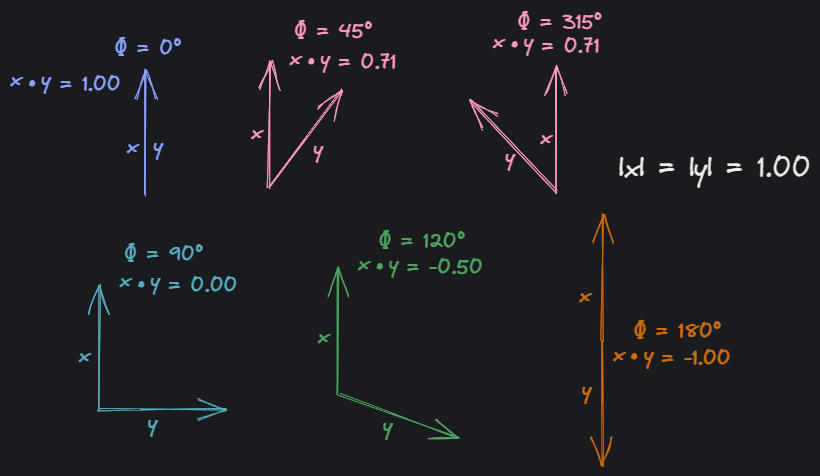

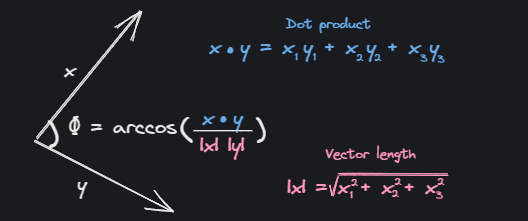

In the IsInVisibleArea method, we have the same test as in the Ears component to find out whether a detectable is in the visible distance, and we also test if the detectable is inside the field of view.

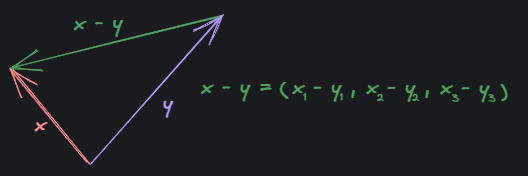

To figure it out, we need the direction from the eyes to the detectable, which we get simply by vector subtraction. Then we calculate the dot product between the normalized direction and the forward vector of Eyes (which is also a unit vector).
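Here is a minimal sketch of that calculation with concrete numbers. It uses System.Numerics.Vector3 as a stand-in for UnityEngine.Vector3 so it runs outside Unity, and ViewDot.Compute is a hypothetical helper name, not part of the example project.

```csharp
using System.Numerics;

public static class ViewDot
{
    // Dot product between the eyes' forward vector and the unit direction to the target.
    // Assumes forward is already normalized and the target is not at the eyes' position.
    public static float Compute(Vector3 eyes, Vector3 forward, Vector3 target)
    {
        Vector3 direction = Vector3.Normalize(target - eyes);
        return Vector3.Dot(direction, forward);
    }
}
```

With the eyes at the origin looking along +Z, a target straight ahead at (0, 0, 5) gives 1, a target 45 degrees to the side at (1, 0, 1) gives roughly 0.707, and a target directly behind at (0, 0, -1) gives -1. The result is the same whether the target is to the left or to the right.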

Finally, we compare the dot product with FieldOfViewDot, which we set in the Start method to one minus half of the FieldOfView remapped from its original range of 0 to 90 to a range of 0 to 1. If the dot product is greater than or equal to FieldOfViewDot, the detectable is inside the field of view.
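One caveat is worth spelling out. The dot product of two unit vectors is the cosine of the angle between them, and the linear remap matches the cosine only at the two ends of the range, so in between the cone ends up wider than the FieldOfView value suggests. The sketch below compares the two thresholds in plain C# (System.Math instead of Unity's Mathf, so it runs anywhere). FovThreshold and its methods are hypothetical names, and the cosine variant is an alternative, not what the example project does.

```csharp
using System;

public static class FovThreshold
{
    // The tutorial's approach: 1 minus the half-angle remapped from [0, 90] to [0, 1].
    public static double Linear(double fieldOfViewDegrees)
    {
        return 1.0 - (fieldOfViewDegrees * 0.5) / 90.0;
    }

    // Alternative: the cosine of the half-angle, which gives an exact angular cutoff.
    public static double Cosine(double fieldOfViewDegrees)
    {
        return Math.Cos(fieldOfViewDegrees * 0.5 * Math.PI / 180.0);
    }

    // Half-angle in degrees that a given dot-product threshold really corresponds to.
    public static double EffectiveHalfAngle(double dotThreshold)
    {
        return Math.Acos(dotThreshold) * 180.0 / Math.PI;
    }
}
```

For a FieldOfView of 90, Linear returns 0.5, which corresponds to a half-angle of 60 degrees, so the cone is effectively 120 degrees wide. Cosine returns about 0.707, which keeps the half-angle at 45 degrees. If the exact angle matters in your game, the Unity equivalent would be Mathf.Cos(FieldOfView * 0.5f * Mathf.Deg2Rad).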

The dot product is particularly useful in this case. Since we don't need to care whether the detectable is on the left or on the right side, there's no need to calculate the actual angle between two vectors.

Of course, we can set FieldOfView directly in the range between 0 and 1, get rid of FieldOfViewDot and cut out the value remapping, but it's much more intuitive to think about FOV in terms of angles.

Exposing values in human-friendly units while internally using different ones for better performance is a common practice, often seen with degrees and radians as well.

Unity has us covered here, once again with Vector3.Distance and also with Vector3.Dot methods. There's also the Vector3.Angle method for cases when the dot product is not enough. That's nice, even though the math behind it is relatively basic. I'm including it here as a little bonus.
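For reference, these are the standard textbook definitions behind those three methods, written for two points $\mathbf{p}$, $\mathbf{q}$ and two vectors $\mathbf{a}$, $\mathbf{b}$ with the angle $\theta$ between them. The symbols are illustrative notation, not taken from Unity's source.

```latex
\begin{aligned}
\operatorname{Distance}(\mathbf{p}, \mathbf{q}) &= \lVert \mathbf{p} - \mathbf{q} \rVert
  = \sqrt{(p_x - q_x)^2 + (p_y - q_y)^2 + (p_z - q_z)^2} \\
\operatorname{Dot}(\mathbf{a}, \mathbf{b}) &= a_x b_x + a_y b_y + a_z b_z
  = \lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert \cos\theta \\
\theta &= \arccos\!\left( \frac{\mathbf{a} \cdot \mathbf{b}}{\lVert \mathbf{a} \rVert \, \lVert \mathbf{b} \rVert} \right)
\end{aligned}
```

When both vectors have length one, the second line reduces to $\cos\theta$, which is why the Eyes component normalizes the direction before taking the dot product. Vector3.Angle reports $\theta$ in degrees.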

As mentioned before, in the last test the Eyes component checks whether the detectable is occluded. This is implemented in the IsNotOccluded method using Physics.Raycast.

X-Ray vision, invisibility, glass, more detectable objects, etc.

Before we wrap up Part I, let's briefly talk about some edge cases, limitations, and extra features.

To tackle invisibility, you can add another member next to CanBeHear in the Detectable component and, in the Eyes component, perform a similar check as you've seen in the Ears component.

For X-Ray vision, the simplest approach would be to add a Boolean variable to the Eyes component, something like HasXRay, and skip the IsNotOccluded method call when the flag is set.
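Both ideas can be combined into a single decision, sketched below as a Unity-free function so the logic is easy to check. EyesRules is a hypothetical name, IsInvisible is an assumed name for the new Detectable member, and HasXRay is the flag suggested above.

```csharp
// Hypothetical, Unity-free model of the extended Eyes check.
// Inside Eyes.HasDetected the same rule could read:
//   !detectable.IsInvisible && IsInVisibleArea(detectable)
//       && (HasXRay || IsNotOccluded(detectable))
public static class EyesRules
{
    public static bool HasDetected(bool isInVisibleArea, bool isNotOccluded, bool isInvisible, bool hasXRay)
    {
        // An invisible detectable is never seen, X-ray or not.
        if (isInvisible)
        {
            return false;
        }

        // X-ray vision skips the occlusion test but still respects range and field of view.
        return isInVisibleArea && (hasXRay || isNotOccluded);
    }
}
```

Whether X-ray vision should also reveal invisible objects is a design decision; this sketch assumes it should not.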

If you have transparent objects in your scene, like glass doors or windows, you can simply assign them to the Ignore Raycast layer, which Physics.Raycast skips when no layer mask is passed, as is the case in IsNotOccluded.

Having more than one detectable object would be a bit tricky, but one reasonable approach would be, instead of adding complexity to senses, creating another layer that would provide a detectable object from a pool of detectables at runtime. This way, there will still be only one detectable associated with a set of senses at a time, and the implementation of senses would stay intact.
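As a rough illustration of such a layer, the sketch below picks one target from a pool. It assumes "closest wins" as the selection policy, which is only one of many options, and it uses System.Numerics.Vector3 in place of UnityEngine.Vector3 so it runs outside Unity. Candidate and DetectableSelector are hypothetical names.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical stand-in for a Detectable: just a name and a world position.
public sealed class Candidate
{
    public string Name;
    public Vector3 Position;

    public Candidate(string name, Vector3 position)
    {
        Name = name;
        Position = position;
    }
}

public static class DetectableSelector
{
    // Returns the candidate closest to the observer, or null if the pool is empty.
    public static Candidate PickClosest(Vector3 observer, IEnumerable<Candidate> pool)
    {
        Candidate best = null;
        float bestDistanceSquared = float.MaxValue;

        foreach (Candidate candidate in pool)
        {
            // Squared distance is enough for comparisons and avoids a square root.
            float distanceSquared = Vector3.DistanceSquared(observer, candidate.Position);
            if (distanceSquared < bestDistanceSquared)
            {
                best = candidate;
                bestDistanceSquared = distanceSquared;
            }
        }

        return best;
    }
}
```

In Unity, a small component on the Enemy could run a selection like this periodically and assign the result to the Detectable field of each Sense, leaving Eyes and Ears unchanged.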

That's it for today. I hope you've enjoyed it. If so, stay tuned for Part II, where we're going to dissect the implementation of an AI controller and behaviours like patrol between points, chase player, and investigate location.